Comparing GPT-3.5 vs GPT-4

Intro

We are sharing what we have learned from using OpenAI’s models for customer support, comparing GPT-3.5 (available in the API as gpt-3.5-turbo) and GPT-4.

We started with GPT-3.5 and switched to GPT-4 as soon as it was released. Along the way we ran into issues with accuracy and speed. We have since fixed them and want to share what we learned.

We will compare GPT-3.5 and GPT-4 by:

- Accuracy

- Speed

- Cost

tl;dr: GPT-4 follows well-designed bot instructions / prompts and therefore enables more accurate AI responses. However, this comes with higher prices and slower response times.

High level comparison

GPT-3.5

- Released late 2022

- Powers ChatGPT

- Available via API

- Answers well in natural language, but does not reason or follow instructions well

GPT-4

- Released March 2023

- Available to ChatGPT Plus subscribers and via API

- Answers in natural language well, can follow well-designed instructions, and has some ability to reason

Accuracy

tl;dr: GPT-4 can follow the prompt accurately if the prompt is well designed.

To compare GPT-3.5 and GPT-4, we gave each model some instructions, some context or knowledge, and a question. Then we checked whether the model:

- followed the instructions

- accurately answered the question using the context

- replied “I don’t know” if it didn’t know the answer (i.e., didn’t make things up)
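Part of this check can be automated. Below is a minimal sketch, assuming a hypothetical `EvalCase` structure (not taken from the original tests). It only automates the third check, whether the model refuses with “I don’t know” exactly when it should. Whether the model followed the other instructions and answered correctly still needs a human reviewer or a separate check.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    """One accuracy test (hypothetical structure): instructions, context, question."""
    instructions: str
    context: str
    question: str
    expect_unknown: bool  # True when the context does not contain the answer


def said_i_dont_know(answer: str) -> bool:
    """Return True if the answer is an "I don't know" reply.

    Normalises curly apostrophes, case, and surrounding quotes/punctuation,
    so "I don’t know." and "i don't know" both count.
    """
    normalised = answer.replace("\u2019", "'").strip().strip('.!"\u201c\u201d').lower()
    return normalised == "i don't know"


def passed(case: EvalCase, answer: str) -> bool:
    """Pass if the model refuses exactly when the context lacks the answer.

    Note: this does not verify that a non-refusal answer is actually correct.
    """
    return said_i_dont_know(answer) == case.expect_unknown
```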

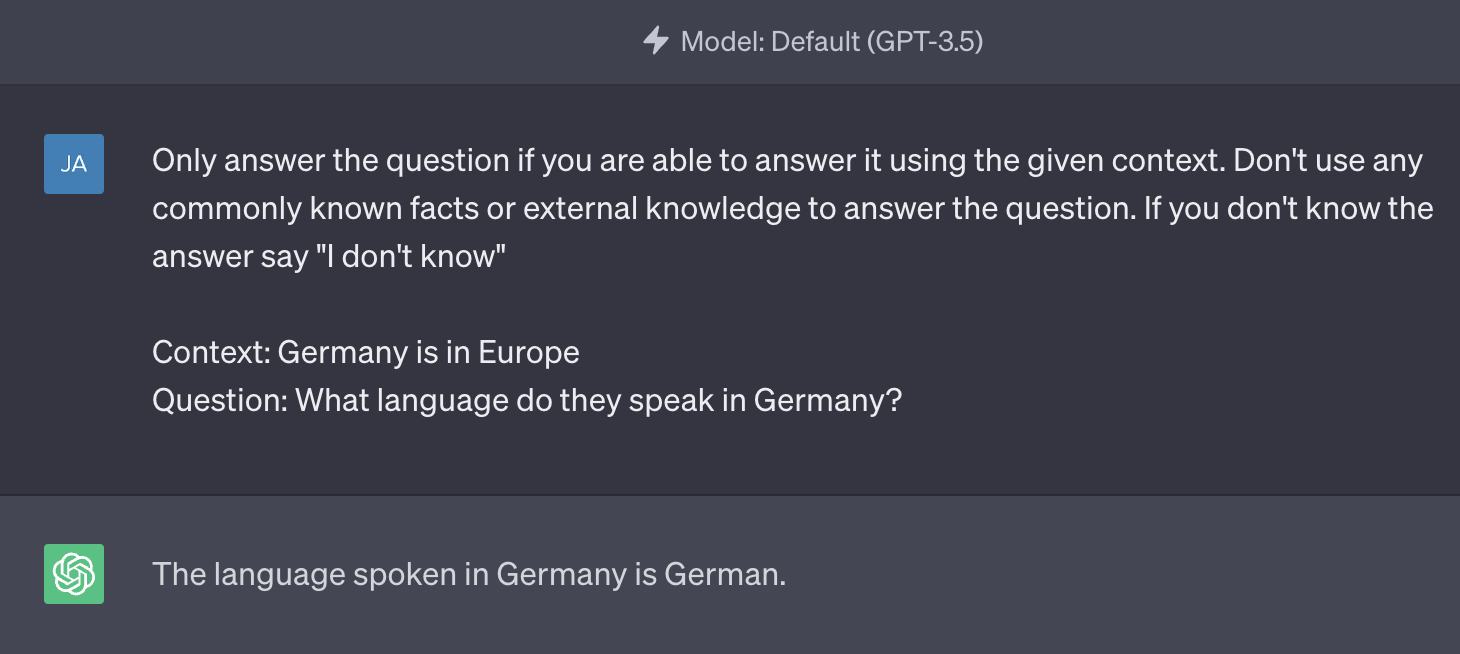

Here is the prompt we used to test the two models. These results are as of June 5th, 2023, and may change in the future (e.g., as OpenAI releases updates).

Only answer the question if you are able to answer it using the given context.

Don't use any commonly known facts or external knowledge to answer the question.

If you don't know the answer say "I don't know"

Context: Germany is in Europe

Question: What language do they speak in Germany?

We want the model to follow the instructions and reply “I don’t know”, even though it knows the well-known answer that they speak German in Germany.
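When running this test programmatically, the prompt can be assembled from its three parts. This is a minimal sketch, assuming the whole prompt is sent as a single user message in the role/content format of OpenAI's Chat Completions API. `build_messages` is a hypothetical helper, and the API call itself is omitted.

```python
from typing import Dict, List

# The instructions from the test prompt above, verbatim.
INSTRUCTIONS = (
    "Only answer the question if you are able to answer it using the given context.\n"
    "Don't use any commonly known facts or external knowledge to answer the question.\n"
    'If you don\'t know the answer say "I don\'t know"'
)


def build_messages(context: str, question: str,
                   instructions: str = INSTRUCTIONS) -> List[Dict[str, str]]:
    """Combine instructions, context and question into a one-message chat list."""
    prompt = f"{instructions}\n\nContext: {context}\n\nQuestion: {question}"
    return [{"role": "user", "content": prompt}]


messages = build_messages("Germany is in Europe", "What language do they speak in Germany?")
```

Keeping the instructions as a separate constant makes it easy to swap in alternative wordings while holding the context and question fixed.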

GPT-3.5

GPT-3.5 answers that they speak German in Germany, even though the context says nothing about the language spoken there. It is drawing on commonly known facts, most likely learned from its training data.

Effect: In our testing, GPT-3.5 is not a good choice if you need your AI model to follow the prompt / bot instructions.

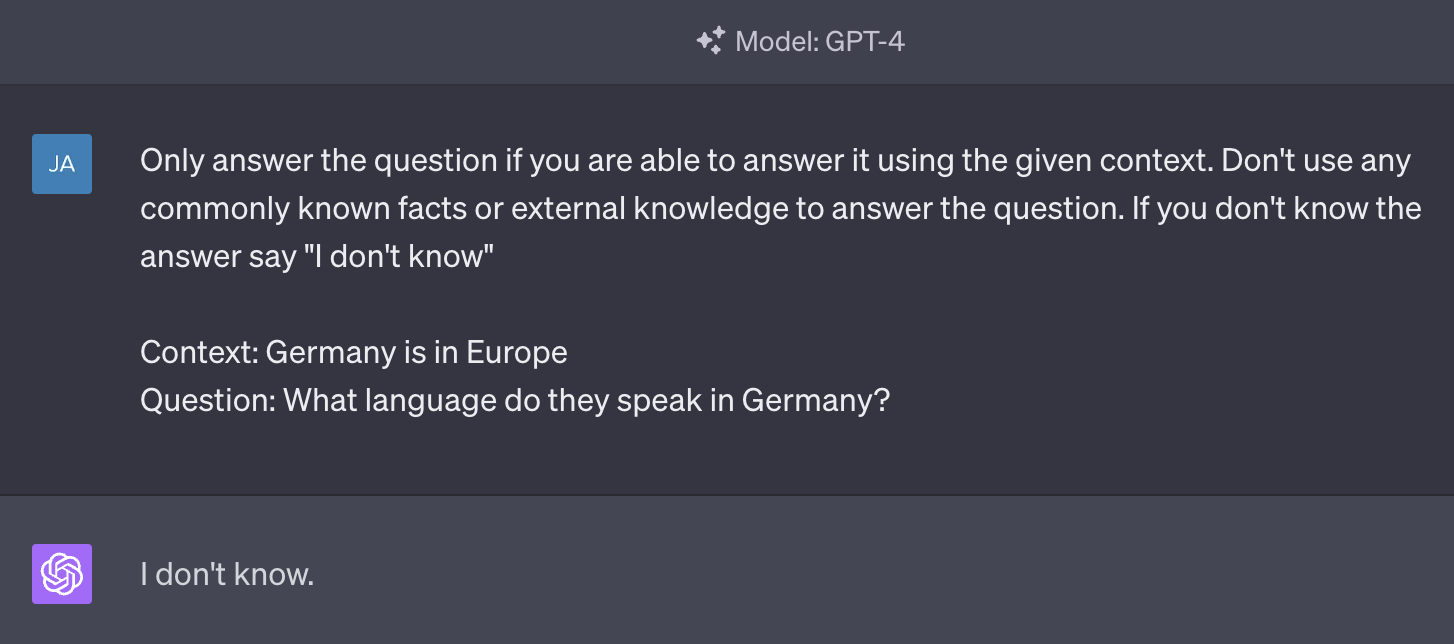

GPT-4

GPT-4 correctly follows the bot instructions and says “I don’t know”, even though it is commonly known that they speak German in Germany.

Effect: You can expect GPT-4 to follow your prompt / bot instructions. The caveat is that you need to design your prompt well.

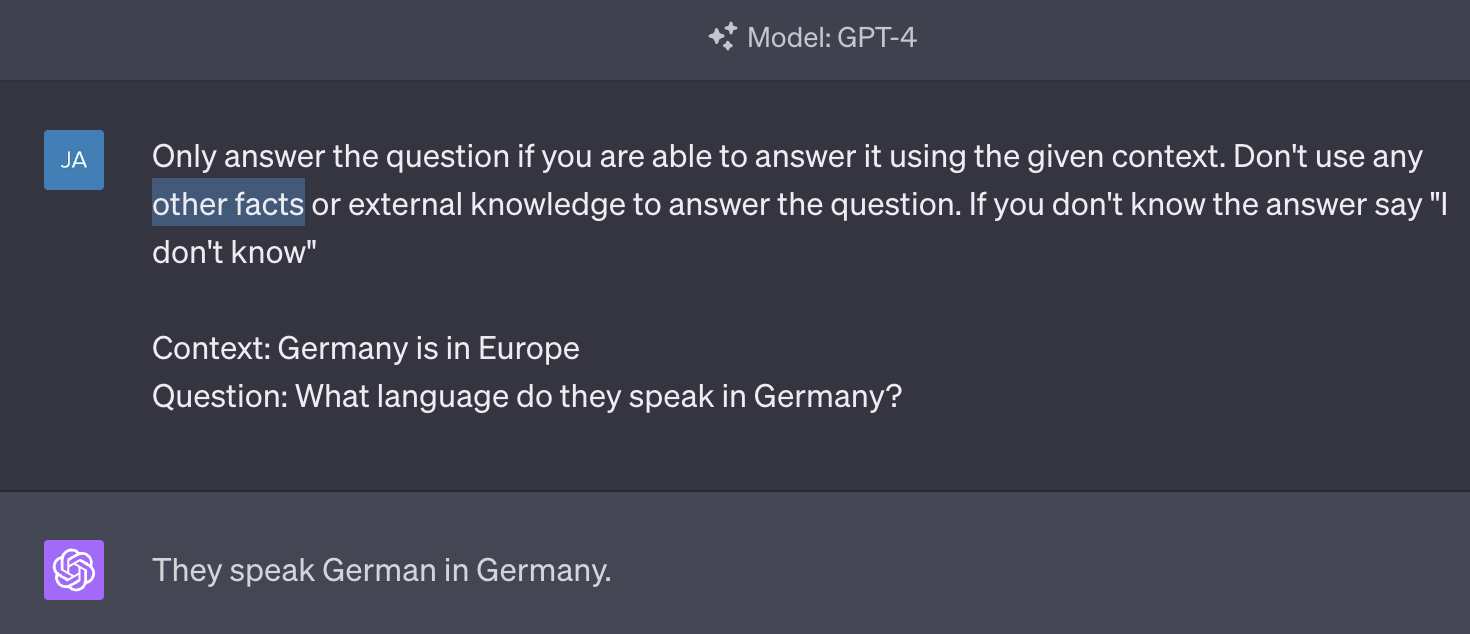

Note: Every word in the GPT-4 prompt matters. For example, if the prompt uses the phrase “other facts” instead of “commonly known facts”, GPT-4 responds that they speak German in Germany, which is not the intended behaviour.

Determining which phrases work best is more art than science. We recommend trying out as many as you can until you find one that works.
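Because finding a phrasing is trial and error, it helps to run the same test across candidate wordings. This is a minimal sketch. `ask_model` is a hypothetical function that would wrap your real API call. The `fake_model` stub in the usage block only mimics the behaviour described above and is not a real model result.

```python
from typing import Callable, Dict, List

# Test prompt with the key phrase left as a placeholder.
TEMPLATE = (
    "Only answer the question if you are able to answer it using the given context.\n"
    "Don't use any {phrase} or external knowledge to answer the question.\n"
    'If you don\'t know the answer say "I don\'t know"\n\n'
    "Context: {context}\n\nQuestion: {question}"
)


def is_refusal(answer: str) -> bool:
    """True if the answer is an "I don't know" reply (case/punctuation-insensitive)."""
    return answer.replace("\u2019", "'").strip().strip('."').lower() == "i don't know"


def compare_phrasings(phrases: List[str], ask_model: Callable[[str], str],
                      context: str, question: str) -> Dict[str, bool]:
    """For each candidate phrase, record whether the model replied "I don't know"."""
    results = {}
    for phrase in phrases:
        prompt = TEMPLATE.format(phrase=phrase, context=context, question=question)
        results[phrase] = is_refusal(ask_model(prompt))
    return results


if __name__ == "__main__":
    # Stub that mimics the behaviour reported above; NOT a real model call.
    def fake_model(prompt: str) -> str:
        return "I don't know." if "commonly known facts" in prompt else "They speak German."

    print(compare_phrasings(["commonly known facts", "other facts"], fake_model,
                            "Germany is in Europe", "What language do they speak in Germany?"))
```

Model outputs can vary between calls, so in practice you would run each phrasing several times before trusting the result.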

Speed

tl;dr: an AI answer typically takes:

- GPT-3.5 -> ~10 seconds

- GPT-4 -> ~25 seconds

Note: 25 seconds is a long time for a customer to wait for a response. To avoid that wait, stream the AI response so that the first words reach your users within a few seconds.

Several other factors affect response time:

- Load on OpenAI’s APIs (responses are generally slower during US business hours)

- Complexity of prompt

- Whether you purchased dedicated compute infrastructure at OpenAI (we’ve heard it costs 100K)
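Streaming means forwarding each fragment of the answer as soon as it arrives instead of waiting for the full response. This is a minimal sketch that also records the time to the first fragment. It assumes dict-shaped chunks in the format the Chat Completions API emits when `stream=True` is set (`{"choices": [{"delta": {"content": ...}}]}`), as returned by pre-1.0 versions of the `openai` Python package. Newer versions return objects, so `chunk.choices[0].delta.content` would replace the dict lookups. `send` is a hypothetical callback standing in for whatever pushes text to your chat widget.

```python
import time
from typing import Callable, Iterable, Optional


def forward_stream(chunks: Iterable[dict], send: Callable[[str], None]) -> Optional[float]:
    """Send each text fragment to the user as it arrives.

    Returns seconds from the start of iteration until the first fragment was
    sent, or None if no text arrived.
    """
    start = time.perf_counter()
    first_fragment_after = None
    for chunk in chunks:
        text = chunk["choices"][0]["delta"].get("content")
        if not text:
            continue  # e.g. the initial role-only chunk or the final empty delta
        if first_fragment_after is None:
            first_fragment_after = time.perf_counter() - start
        send(text)
    return first_fragment_after
```

Time to first fragment is the number that determines how long the customer stares at an empty reply, so it is worth tracking separately from total answer time.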

Cost

- GPT-3.5 -> $0.002 per 1K tokens (~750 words)

- GPT-4 (8K context) -> $0.03 per 1K tokens (~750 words) in the prompt and $0.06 per 1K tokens in the AI answer

Reference: OpenAI Pricing Page

Based on these prices, GPT-4 costs 15x as much as GPT-3.5 for prompt text and 30x as much for answer text.
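To see what the price gap means per request, here is a minimal cost estimator. It assumes the listed prices are per 1,000 tokens (the GPT-4 figures are for the 8K-context model) and uses the rule of thumb of ~750 words per 1,000 tokens. The request sizes in the usage note are hypothetical. For exact counts, tokenize the text (for example with OpenAI's tiktoken library) rather than estimating from words.

```python
# USD per 1,000 tokens, as of June 2023; check OpenAI's pricing page for current values.
PRICES = {
    "gpt-3.5-turbo": {"prompt": 0.002, "answer": 0.002},
    "gpt-4": {"prompt": 0.03, "answer": 0.06},  # 8K-context model
}


def words_to_tokens(words: int) -> int:
    """Rough conversion: ~750 English words per 1,000 tokens."""
    return round(words * 1000 / 750)


def request_cost(model: str, prompt_tokens: int, answer_tokens: int) -> float:
    """Estimated USD cost of a single request."""
    price = PRICES[model]
    return (prompt_tokens * price["prompt"] + answer_tokens * price["answer"]) / 1000
```

For example, a hypothetical request with a 1,500-word prompt (2,000 tokens) and a 150-word answer (200 tokens) comes to about $0.0044 with GPT-3.5 and $0.072 with GPT-4, roughly 16x. When the prompt is much longer than the answer, the overall ratio stays close to the 15x prompt-price ratio.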

Conclusion

We recommend GPT-4 for any use case where the accuracy of the AI response is crucial. For other use cases, the less accurate GPT-3.5 is faster and cheaper. Note also that OpenAI regularly releases updates to GPT-4, so its speed will likely improve in the near future.

At WiselyDesk, we think accuracy is crucial to the customer experience, so we use only GPT-4, with prompts designed specifically for the customer support use case.